Upgrading Kubernetes from 1.13.4 to 1.14.0

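Conclusion: skipping versions is not supported. The upgrade only worked from 1.13.5 to 1.14.0, so the path is 1.13.4 -> 1.13.5 -> 1.14.0.

The 1.14 release contains substantial changes.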

1.14.0 has been released: https://github.com/kubernetes/kubernetes/releases/tag/v1.14.0

Changelog: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.14.md#v1140

Official upgrade guide: https://kubernetes.io/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade-1-14/

What follows is the failed first attempt:

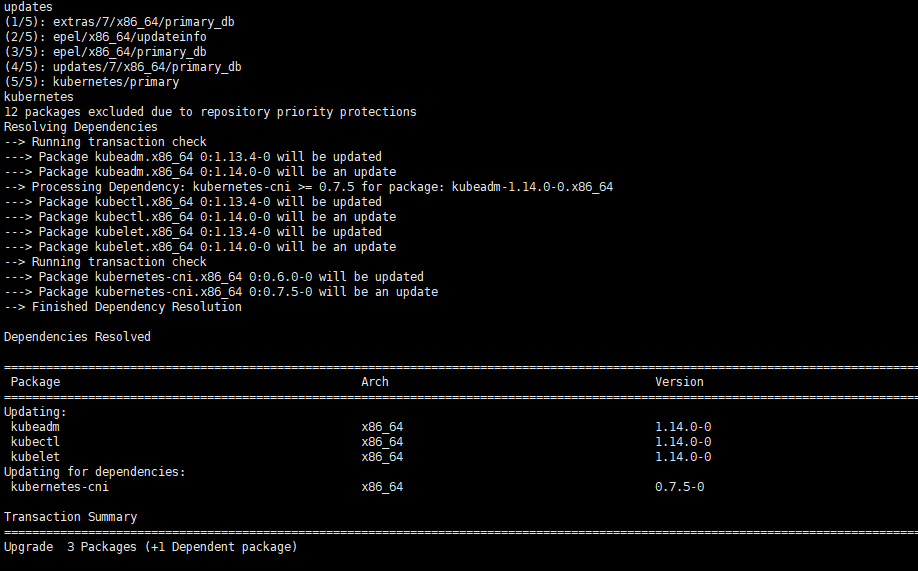

Upgrade kubeadm

First, turn on the proxy:

export http_proxy='http://192.168.2.2:1080'

export https_proxy='http://192.168.2.2:1080'

export ftp_proxy='http://192.168.2.2:1080'
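If you switch the proxy on and off often, a pair of shell helpers keeps it tidy. This is a minimal sketch. The proxy address is the one used above, and the script is meant to be sourced, for example from a hypothetical proxy.sh. The `no_proxy` list is an assumption: localhost plus a hypothetical node subnet 192.168.2.0/24. Its purpose is to keep kubectl/kubeadm talking to the API server directly instead of through the proxy. Go-based tools such as kubectl understand CIDR entries there, but not every program does.

```shell
#!/bin/sh
# Sketch: turn the proxy on/off in the current shell (source this file).
# PROXY is the address used in this post.
# Assumption: NO_PROXY_LIST is hypothetical; replace 192.168.2.0/24
# with the subnet your nodes actually use.
PROXY="http://192.168.2.2:1080"
NO_PROXY_LIST="localhost,127.0.0.1,192.168.2.0/24"

proxy_on() {
  export http_proxy="$PROXY" https_proxy="$PROXY" ftp_proxy="$PROXY"
  # Keep cluster traffic (API server, etcd) off the proxy.
  export no_proxy="$NO_PROXY_LIST"
}

proxy_off() {
  unset http_proxy https_proxy ftp_proxy no_proxy
}
```

Typical use: `proxy_on` before `yum install`, and `proxy_off` afterwards so later kubectl/kubeadm calls do not go through the proxy.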

Upgrade kubelet, kubeadm and kubectl on every node:

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

But kubeadm upgrade plan no longer offers any upgrade:

[root@node01 ~]# kubeadm upgrade plan

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.13.4

[upgrade/versions] kubeadm version: v1.14.0

Awesome, you're up-to-date! Enjoy!

Try forcing the upgrade. First, fetch the 1.14.0 images:
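`docker pull` is executed by the Docker daemon, not by the shell, so the proxy variables exported earlier do not apply to it. That is presumably why the images below are pulled on another machine and carried over. An alternative is to give the daemon itself the proxy through a systemd drop-in, so each node can pull directly. This is a minimal sketch. It assumes Docker runs under systemd and reuses the proxy address above. The function name is hypothetical.

```shell
#!/bin/sh
# Sketch: write a systemd drop-in so dockerd itself uses the proxy.
# Assumption: Docker is managed by systemd; function name is hypothetical.
# $1: proxy URL; $2: drop-in directory (defaults to the usual location).
write_docker_proxy_dropin() {
  proxy="$1"
  dir="${2:-/etc/systemd/system/docker.service.d}"
  mkdir -p "$dir"
  cat > "$dir/http-proxy.conf" <<EOF
[Service]
Environment="HTTP_PROXY=$proxy"
Environment="HTTPS_PROXY=$proxy"
Environment="NO_PROXY=localhost,127.0.0.1"
EOF
}
```

After writing the file, run `systemctl daemon-reload` and `systemctl restart docker`. Restarting Docker interrupts the containers on that node unless live-restore is enabled, so do one node at a time.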

#pull the images on a machine that can reach gcr.io

docker pull gcr.io/google-containers/kube-apiserver:v1.14.0

docker pull gcr.io/google-containers/kube-scheduler:v1.14.0

docker pull gcr.io/google-containers/kube-proxy:v1.14.0

docker pull gcr.io/google-containers/kube-controller-manager:v1.14.0

docker pull gcr.io/google-containers/etcd:3.2.26

docker pull gcr.io/google-containers/etcd:3.3.10

docker pull gcr.io/google-containers/pause:3.1

docker pull gcr.io/google-containers/coredns:1.3.1

#carry them back: save to files, copy them to the nodes, then load

docker save gcr.io/google-containers/kube-proxy:v1.14.0 >kube-proxy

docker save gcr.io/google-containers/kube-scheduler:v1.14.0 >kube-scheduler

docker save gcr.io/google-containers/kube-controller-manager:v1.14.0 >kube-controller-manager

docker save gcr.io/google-containers/kube-apiserver:v1.14.0 >kube-apiserver

docker save gcr.io/google-containers/etcd:3.2.26 >etcd3226

docker save gcr.io/google-containers/coredns:1.3.1 >coredns

docker save gcr.io/google-containers/etcd:3.3.10 >etcd3310

docker save gcr.io/google-containers/pause:3.1 >pause

docker load < kube-proxy

docker load < kube-scheduler

docker load < kube-controller-manager

docker load < kube-apiserver

docker load < etcd3226

docker load < coredns

docker load < etcd3310

docker load < pause

#tag them as k8s.gcr.io, the registry name kubeadm uses by default

docker tag gcr.io/google-containers/kube-apiserver:v1.14.0 k8s.gcr.io/kube-apiserver:v1.14.0

docker tag gcr.io/google-containers/kube-scheduler:v1.14.0 k8s.gcr.io/kube-scheduler:v1.14.0

docker tag gcr.io/google-containers/kube-proxy:v1.14.0 k8s.gcr.io/kube-proxy:v1.14.0

docker tag gcr.io/google-containers/kube-controller-manager:v1.14.0 k8s.gcr.io/kube-controller-manager:v1.14.0

docker tag gcr.io/google-containers/etcd:3.3.10 k8s.gcr.io/etcd:3.3.10

docker tag gcr.io/google-containers/pause:3.1 k8s.gcr.io/pause:3.1

docker tag gcr.io/google-containers/coredns:1.3.1 k8s.gcr.io/coredns:1.3.1
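The pull / save / load / tag sequence above repeats one pattern per image, so a loop removes most of the typing. This is a minimal sketch. The image list is copied from the tag step above, so etcd:3.2.26, which is pulled but never retagged, is left out. The tar file names are hypothetical. `kubeadm config images list --kubernetes-version v1.14.0` should print the list kubeadm expects, if you want to double-check it. By default the functions only print the commands.

```shell
#!/bin/sh
# Sketch: batch the pull / save / load / tag steps for v1.14.0.
# Image list copied from the tag step above; archive names are hypothetical.
SRC_REPO="gcr.io/google-containers"   # registry path the images are pulled from
DST_REPO="k8s.gcr.io"                 # registry name kubeadm uses by default
IMAGES="kube-apiserver:v1.14.0 kube-controller-manager:v1.14.0
kube-scheduler:v1.14.0 kube-proxy:v1.14.0 etcd:3.3.10 pause:3.1 coredns:1.3.1"

# Print each command instead of running it, unless DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# "kube-apiserver:v1.14.0" -> "kube-apiserver_v1.14.0.tar"
archive_name() {
  printf '%s.tar\n' "$(printf '%s' "$1" | tr ':' '_')"
}

# On the machine that can reach gcr.io: pull each image and save it to a tar file.
fetch_images() {
  for img in $IMAGES; do
    run docker pull "$SRC_REPO/$img"
    run docker save -o "$(archive_name "$img")" "$SRC_REPO/$img"
  done
}

# On every node, after copying the tar files over: load and retag each image.
import_images() {
  for img in $IMAGES; do
    run docker load -i "$(archive_name "$img")"
    run docker tag "$SRC_REPO/$img" "$DST_REPO/$img"
  done
}
```

Run `DRY_RUN=0 fetch_images` on the machine with gcr.io access. Copy the .tar files to each node, then run `DRY_RUN=0 import_images` there. Changing the version strings in IMAGES covers the 1.13.5 round as well.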

Forcing the upgrade to 1.14.0 fails and rolls back. The documentation says that skipping versions is not supported:

You only can upgrade from one MINOR version to the next MINOR version, or between PATCH versions of the same MINOR. That is, you cannot skip MINOR versions when you upgrade. For example, you can upgrade from 1.y to 1.y+1, but not from 1.y to 1.y+2.
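The rule quoted above comes down to comparing MINOR numbers. Below is a minimal sketch that encodes only that rule; the function name is hypothetical. By this rule alone, v1.13.4 -> v1.14.0 is also a single MINOR step, so this check by itself does not explain the rollback seen above.

```shell
#!/bin/sh
# Sketch of the MINOR-version rule quoted above (function name is hypothetical).
# can_upgrade FROM TO: exit status 0 if TO is in the same MINOR as FROM
# or exactly one MINOR above it. Versions look like v1.13.5.
# PATCH numbers are not compared.
can_upgrade() {
  from="${1#v}"; to="${2#v}"
  fmaj="${from%%.*}"; frest="${from#*.}"; fmin="${frest%%.*}"
  tmaj="${to%%.*}";   trest="${to#*.}";   tmin="${trest%%.*}"
  # A MAJOR change is never a single MINOR step.
  [ "$fmaj" = "$tmaj" ] || return 1
  # Same MINOR (patch upgrade) or the next MINOR.
  [ "$tmin" -eq "$fmin" ] || [ "$tmin" -eq $((fmin + 1)) ]
}
```

For example, `can_upgrade v1.12.7 v1.14.0` fails because it skips 1.13.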

Look at the 1.13.5 components.

First, downgrade kubeadm, kubelet and kubectl:

yum remove -y kubeadm kubeadm* kubelet kubelet* kubectl kubectl*

yum list --showduplicates kubeadm --disableexcludes=kubernetes #1.13.5-0

yum list --showduplicates kubelet --disableexcludes=kubernetes #1.13.5-0

yum list --showduplicates kubectl --disableexcludes=kubernetes #1.13.5-0

yum install -y kubeadm-1.13.5-0 --disableexcludes=kubernetes

yum install -y kubelet-1.13.5-0 --disableexcludes=kubernetes

yum install -y kubectl-1.13.5-0 --disableexcludes=kubernetes
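An unversioned `yum install` always picks the newest packages, which is how 1.14.0 arrived in the first place. Pinning all three tools in one transaction keeps them consistent. This is a minimal sketch with a hypothetical function name. It follows the remove-then-install approach used above and, by default, only prints the commands.

```shell
#!/bin/sh
# Sketch: pin kubeadm, kubelet and kubectl to one package version.
# Function name is hypothetical; DRY_RUN=1 (the default) only prints commands.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# $1: package version as shown by `yum list --showduplicates`, e.g. 1.13.5-0
pin_k8s_tools() {
  ver="$1"
  run yum remove -y kubeadm kubelet kubectl
  run yum install -y "kubeadm-$ver" "kubelet-$ver" "kubectl-$ver" \
    --disableexcludes=kubernetes
}
```

`DRY_RUN=0 pin_k8s_tools 1.13.5-0` would repeat the downgrade above in two commands.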

Check the component versions:

[root@node01 OpenResty]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"13", GitVersion:"v1.13.5", GitCommit:"2166946f41b36dea2c4626f90a77706f426cdea2", GitTreeState:"clean", BuildDate:"2019-03-25T15:24:33Z", GoVersion:"go1.11.5", Compiler:"gc", Platform:"linux/amd64"}

[root@node01 OpenResty]# kubeadm upgrade plan

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.13.4

[upgrade/versions] kubeadm version: v1.13.5

I0327 04:16:41.500324 74049 version.go:237] remote version is much newer: v1.14.0; falling back to: stable-1.13

[upgrade/versions] Latest stable version: v1.13.5

[upgrade/versions] Latest version in the v1.13 series: v1.13.5

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT CURRENT AVAILABLE

Kubelet 4 x v1.13.4 v1.13.5

Upgrade to the latest version in the v1.13 series:

COMPONENT CURRENT AVAILABLE

API Server v1.13.4 v1.13.5

Controller Manager v1.13.4 v1.13.5

Scheduler v1.13.4 v1.13.5

Kube Proxy v1.13.4 v1.13.5

CoreDNS 1.2.6 1.2.6

Etcd 3.2.24 3.2.24

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.13.5

_____________________________________________________________________


First, fetch the 1.13.5 images:

#pull the images on a machine that can reach gcr.io

docker pull gcr.io/google-containers/kube-apiserver:v1.13.5

docker pull gcr.io/google-containers/kube-scheduler:v1.13.5

docker pull gcr.io/google-containers/kube-proxy:v1.13.5

docker pull gcr.io/google-containers/kube-controller-manager:v1.13.5

docker save gcr.io/google-containers/kube-proxy:v1.13.5 >kube-proxy

docker save gcr.io/google-containers/kube-scheduler:v1.13.5 >kube-scheduler

docker save gcr.io/google-containers/kube-controller-manager:v1.13.5 >kube-controller-manager

docker save gcr.io/google-containers/kube-apiserver:v1.13.5 >kube-apiserver

#load them on every node

docker load < kube-proxy

docker load < kube-scheduler

docker load < kube-controller-manager

docker load < kube-apiserver

#tag

docker tag gcr.io/google-containers/kube-apiserver:v1.13.5 k8s.gcr.io/kube-apiserver:v1.13.5

docker tag gcr.io/google-containers/kube-scheduler:v1.13.5 k8s.gcr.io/kube-scheduler:v1.13.5

docker tag gcr.io/google-containers/kube-proxy:v1.13.5 k8s.gcr.io/kube-proxy:v1.13.5

docker tag gcr.io/google-containers/kube-controller-manager:v1.13.5 k8s.gcr.io/kube-controller-manager:v1.13.5
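Before running kubeadm upgrade apply, it is worth confirming that every image kubeadm wants is present under the expected name. Otherwise the prepull step will try to reach k8s.gcr.io. Two existing commands produce the inputs. `kubeadm config images list --kubernetes-version v1.13.5` prints the expected images. `docker images --format '{{.Repository}}:{{.Tag}}'` prints the local ones. The helper below is a hypothetical sketch that prints what is missing.

```shell
#!/bin/sh
# Sketch: report required images that are missing locally (helper name is hypothetical).
# $1: required images, one "repo:tag" per line
#     (e.g. output of: kubeadm config images list --kubernetes-version v1.13.5)
# $2: local images, one "repo:tag" per line
#     (e.g. output of: docker images --format '{{.Repository}}:{{.Tag}}')
missing_images() {
  printf '%s\n' "$1" | while IFS= read -r img; do
    [ -n "$img" ] || continue   # skip blank lines
    # Exact, fixed-string, whole-line match against the local list.
    printf '%s\n' "$2" | grep -qxF -- "$img" || printf '%s\n' "$img"
  done
}
```

Call it as `missing_images "$(kubeadm config images list --kubernetes-version v1.13.5)" "$(docker images --format '{{.Repository}}:{{.Tag}}')"`. An empty result means nothing is missing.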

Upgrade to 1.13.5:

[root@node01 ~]# kubeadm upgrade apply v1.13.5

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[upgrade/apply] Respecting the --cri-socket flag that is set with higher priority than the config file.

[upgrade/version] You have chosen to change the cluster version to "v1.13.5"

[upgrade/versions] Cluster version: v1.13.4

[upgrade/versions] kubeadm version: v1.13.5

[upgrade/confirm] Are you sure you want to proceed with the upgrade? [y/N]: y

[upgrade/prepull] Will prepull images for components [kube-apiserver kube-controller-manager kube-scheduler etcd]

[upgrade/prepull] Prepulling image for component etcd.

[upgrade/prepull] Prepulling image for component kube-controller-manager.

[upgrade/prepull] Prepulling image for component kube-scheduler.

[upgrade/prepull] Prepulling image for component kube-apiserver.

[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-etcd

[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager

[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler

[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver

[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-etcd

[apiclient] Found 2 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager

[apiclient] Found 2 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-etcd

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager

[upgrade/prepull] Prepulled image for component etcd.

[upgrade/prepull] Prepulled image for component kube-controller-manager.

[upgrade/prepull] Prepulled image for component kube-scheduler.

[upgrade/prepull] Prepulled image for component kube-apiserver.

[upgrade/prepull] Successfully prepulled the images for all the control plane components

[upgrade/apply] Upgrading your Static Pod-hosted control plane to version "v1.13.5"...

Static pod: kube-apiserver-node01 hash: 68dbc8c884254ade7e14813983726da4

Static pod: kube-controller-manager-node01 hash: 4f902c23e8a849a380013b00c18b95a9

Static pod: kube-scheduler-node01 hash: 24153a858e6f398bb1873c49d68c7f3a

[upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests151842332"

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-apiserver.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2019-03-27-06-14-04/kube-apiserver.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-apiserver-node01 hash: 68dbc8c884254ade7e14813983726da4

Static pod: kube-apiserver-node01 hash: 3da60c731cd24e5d4c25cefc26c2bdb2

[apiclient] Found 3 Pods for label selector component=kube-apiserver

[upgrade/staticpods] Component "kube-apiserver" upgraded successfully!

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-controller-manager.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2019-03-27-06-14-04/kube-controller-manager.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-controller-manager-node01 hash: 4f902c23e8a849a380013b00c18b95a9

Static pod: kube-controller-manager-node01 hash: 4f902c23e8a849a380013b00c18b95a9

Static pod: kube-controller-manager-node01 hash: 186873e78ae3e8982ee0ad18eb81cbfc

[apiclient] Found 3 Pods for label selector component=kube-controller-manager

[upgrade/staticpods] Component "kube-controller-manager" upgraded successfully!

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-scheduler.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2019-03-27-06-14-04/kube-scheduler.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-scheduler-node01 hash: 24153a858e6f398bb1873c49d68c7f3a

Static pod: kube-scheduler-node01 hash: 8cea5badbe1b177ab58353a73cdedd01

[apiclient] Found 3 Pods for label selector component=kube-scheduler

[upgrade/staticpods] Component "kube-scheduler" upgraded successfully!

[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.13" in namespace kube-system with the configuration for the kubelets in the cluster

[kubelet] Downloading configuration for the kubelet from the "kubelet-config-1.13" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "node01" as an annotation

[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.13.5". Enjoy!

[upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you haven't already done so.

After the upgrade:

[root@node01 ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"13", GitVersion:"v1.13.5", GitCommit:"2166946f41b36dea2c4626f90a77706f426cdea2", GitTreeState:"clean", BuildDate:"2019-03-25T15:24:33Z", GoVersion:"go1.11.5", Compiler:"gc", Platform:"linux/amd64"}
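kubeadm version only reports the kubeadm binary. The plan output earlier also listed 4 kubelets at v1.13.4, and those have to be upgraded separately. Each node's kubelet version can be listed with `kubectl get nodes -o jsonpath` and filtered. This is a minimal sketch with a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: list nodes whose kubelet is not at the expected version (helper name hypothetical).
# Reads "<node> <kubeletVersion>" lines on stdin, e.g. from:
#   kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.nodeInfo.kubeletVersion}{"\n"}{end}'
nodes_behind() {
  awk -v want="$1" 'NF >= 2 && $2 != want { print $1, $2 }'
}
```

Pipe the kubectl command into `nodes_behind v1.13.5`. No output means every kubelet matches. The VERSION column of plain `kubectl get nodes` shows the same information.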

Below is the upgrade from 1.13.5 to 1.14.0:

Upgrade kubeadm:

export http_proxy='http://192.168.2.2:1080'

export https_proxy='http://192.168.2.2:1080'

export ftp_proxy='http://192.168.2.2:1080'

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

[root@node01 ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.0", GitCommit:"641856db18352033a0d96dbc99153fa3b27298e5", GitTreeState:"clean", BuildDate:"2019-03-25T15:51:21Z", GoVersion:"go1.12.1", Compiler:"gc", Platform:"linux/amd64"}

[root@node01 ~]# kubeadm upgrade plan

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.13.5

[upgrade/versions] kubeadm version: v1.14.0

Awesome, you're up-to-date! Enjoy!

Still no upgrade detected?
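kubeadm upgrade plan normally looks up the latest release online. It also accepts an optional target version, which skips that lookup, and kubeadm upgrade apply has a `--dry-run` flag that only reports what would change. Below is a hedged sketch (helper name hypothetical) that previews an explicit target before the real apply. Check `kubeadm upgrade plan --help` on your kubeadm version to confirm both options are available.

```shell
#!/bin/sh
# Sketch: preview an upgrade to an explicit target version before applying it.
# Helper name is hypothetical; DRY_RUN=1 (the default) only prints commands.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

preview_upgrade() {
  target="$1"   # e.g. v1.14.0
  # Passing the version explicitly skips the online "latest stable" lookup.
  run kubeadm upgrade plan "$target"
  # --dry-run reports the planned changes without modifying the cluster.
  run kubeadm upgrade apply "$target" --dry-run
}
```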

Force the upgrade to 1.14.0 again:

[root@node01 ~]# kubeadm upgrade apply v1.14.0

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[upgrade/version] You have chosen to change the cluster version to "v1.14.0"

[upgrade/versions] Cluster version: v1.13.5

[upgrade/versions] kubeadm version: v1.14.0

[upgrade/confirm] Are you sure you want to proceed with the upgrade? [y/N]: y

[upgrade/prepull] Will prepull images for components [kube-apiserver kube-controller-manager kube-scheduler etcd]

[upgrade/prepull] Prepulling image for component etcd.

[upgrade/prepull] Prepulling image for component kube-apiserver.

[upgrade/prepull] Prepulling image for component kube-controller-manager.

[upgrade/prepull] Prepulling image for component kube-scheduler.

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager

[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-etcd

[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-etcd

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler

[upgrade/prepull] Prepulled image for component kube-apiserver.

[upgrade/prepull] Prepulled image for component kube-controller-manager.

[upgrade/prepull] Prepulled image for component kube-scheduler.

[upgrade/prepull] Prepulled image for component etcd.

[upgrade/prepull] Successfully prepulled the images for all the control plane components

[upgrade/apply] Upgrading your Static Pod-hosted control plane to version "v1.14.0"...

Static pod: kube-apiserver-node01 hash: 3da60c731cd24e5d4c25cefc26c2bdb2

Static pod: kube-controller-manager-node01 hash: 186873e78ae3e8982ee0ad18eb81cbfc

Static pod: kube-scheduler-node01 hash: 8cea5badbe1b177ab58353a73cdedd01

[upgrade/etcd] Upgrading to TLS for etcd

Static pod: etcd-node01 hash: c4893443e33aea95ea4adad12f1e103f

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/etcd.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2019-03-27-06-54-00/etcd.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: etcd-node01 hash: c4893443e33aea95ea4adad12f1e103f

Static pod: etcd-node01 hash: c4893443e33aea95ea4adad12f1e103f

Static pod: etcd-node01 hash: 4867e51eb4891975cd81b22ac94c2220

[apiclient] Found 3 Pods for label selector component=etcd

[apiclient] Found 2 Pods for label selector component=etcd

[upgrade/staticpods] Component "etcd" upgraded successfully!

[upgrade/etcd] Waiting for etcd to become available

[upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests150531497"

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-apiserver.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2019-03-27-06-54-00/kube-apiserver.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-apiserver-node01 hash: 3da60c731cd24e5d4c25cefc26c2bdb2

Static pod: kube-apiserver-node01 hash: 3da60c731cd24e5d4c25cefc26c2bdb2

Static pod: kube-apiserver-node01 hash: 3da60c731cd24e5d4c25cefc26c2bdb2

Static pod: kube-apiserver-node01 hash: 3da60c731cd24e5d4c25cefc26c2bdb2

Static pod: kube-apiserver-node01 hash: 5e3444aa15c18e29c7476a1efc770c3e

[apiclient] Found 3 Pods for label selector component=kube-apiserver

[apiclient] Found 2 Pods for label selector component=kube-apiserver

[upgrade/staticpods] Component "kube-apiserver" upgraded successfully!

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-controller-manager.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2019-03-27-06-54-00/kube-controller-manager.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-controller-manager-node01 hash: 186873e78ae3e8982ee0ad18eb81cbfc

Static pod: kube-controller-manager-node01 hash: 0ff88c9b6e64cded3762e51ff18bce90

[apiclient] Found 3 Pods for label selector component=kube-controller-manager

[upgrade/staticpods] Component "kube-controller-manager" upgraded successfully!

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-scheduler.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2019-03-27-06-54-00/kube-scheduler.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-scheduler-node01 hash: 8cea5badbe1b177ab58353a73cdedd01

Static pod: kube-scheduler-node01 hash: 58272442e226c838b193bbba4c44091e

[apiclient] Found 3 Pods for label selector component=kube-scheduler

[upgrade/staticpods] Component "kube-scheduler" upgraded successfully!

[upload-config] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.14" in namespace kube-system with the configuration for the kubelets in the cluster

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.14" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.14.0". Enjoy!

[upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you haven't already done so.
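The last line asks for the kubelets to be upgraded as well. The official guide uses a drain / upgrade / uncordon pattern, one node at a time. A hedged per-node sketch could look like the one below. Three things are assumptions: the helper name, the node name node02 in the example, and the use of ssh to reach each node. The package version 1.14.0-0 follows the naming seen in the yum list output above. The official guide also has a step that refreshes the kubelet configuration on worker nodes; check it for the exact command.

```shell
#!/bin/sh
# Sketch: upgrade the kubelet on one node (helper name is hypothetical).
# Assumption: each node is reachable as "ssh <node>" from where this runs.
# DRY_RUN=1 (the default) only prints the commands.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

upgrade_kubelet() {
  node="$1" ver="$2"   # e.g. node02 1.14.0-0
  # Evict workloads; --ignore-daemonsets lets drain proceed despite DaemonSet pods.
  run kubectl drain "$node" --ignore-daemonsets
  # Install the matching kubelet package and restart it on the node itself.
  run ssh "$node" yum install -y "kubelet-$ver" --disableexcludes=kubernetes
  run ssh "$node" systemctl daemon-reload
  run ssh "$node" systemctl restart kubelet
  # Allow scheduling on the node again.
  run kubectl uncordon "$node"
}
```

Running it once per node, for example `DRY_RUN=0 upgrade_kubelet node02 1.14.0-0`, keeps the rest of the cluster serving while each node is drained.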