Monitoring Kubernetes with kube-state-metrics + Prometheus + Grafana

namespace.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: monitor

prometheus-rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: prometheus
  namespace: monitor
rules:
- apiGroups: [""]
  resources:
  - nodes
  - nodes/proxy
  - services
  - endpoints
  - pods
  verbs: ["get", "list", "watch"]
- apiGroups:
  - extensions
  resources:
  - ingresses
  verbs: ["get", "list", "watch"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: monitor
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: monitor

prometheus-config-kubernetes.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: monitor
data:
  prometheus.yml: |
    global:
    scrape_configs:
    - job_name: 'kubernetes-kubelet'
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc.cluster.local:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics
    - job_name: 'kubernetes-cadvisor'
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc.cluster.local:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
    - job_name: 'kubernetes-kube-state'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
      - source_labels: [__meta_kubernetes_pod_label_grafanak8sapp]
        regex: .*true.*
        action: keep
      - source_labels: ['__meta_kubernetes_pod_label_daemon', '__meta_kubernetes_pod_node_name']
        regex: 'node-exporter;(.*)'
        action: replace
        target_label: nodename

prometheus-Deployment.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  labels:
    name: prometheus
  name: prometheus
  namespace: monitor
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: prometheus-server
    spec:
      serviceAccountName: prometheus
      containers:
      - name: prometheus
        image: functions/prometheus
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: "/etc/prometheus"
          name: config-prometheus
      imagePullSecrets:
      - name: authllzg
      volumes:
      - name: config-prometheus
        configMap:
          name: prometheus-config

prometheus-Service.yaml

kind: Service
apiVersion: v1
metadata:
  name: prometheus-ingress-service
  namespace: monitor
spec:
  selector:
    app: prometheus-server
  ports:
  - protocol: TCP
    port: 9090
    name: prom
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: prom-web-ui
  namespace: monitor
spec:
  rules:
  - host: prometheus.test.net
    http:
      paths:
      - path: /
        backend:
          serviceName: prometheus-ingress-service
          servicePort: prom

state-metrics-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-state-metrics
  namespace: monitor
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: monitor
  name: kube-state-metrics-resizer
rules:
- apiGroups: [""]
  resources:
  - pods
  verbs: ["get"]
- apiGroups: ["extensions"]
  resources:
  - deployments
  resourceNames: ["kube-state-metrics"]
  verbs: ["get", "update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kube-state-metrics
  namespace: monitor
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kube-state-metrics-resizer
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: monitor
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: kube-state-metrics
  namespace: monitor
rules:
- apiGroups: [""]
  resources:
  - nodes
  - pods
  - services
  - resourcequotas
  - replicationcontrollers
  - limitranges
  - persistentvolumeclaims
  - persistentvolumes
  - namespaces
  - endpoints
  verbs: ["list", "watch"]
- apiGroups: ["extensions"]
  resources:
  - daemonsets
  - deployments
  - replicasets
  verbs: ["list", "watch"]
- apiGroups: ["apps"]
  resources:
  - statefulsets
  verbs: ["list", "watch"]
- apiGroups: ["batch"]
  resources:
  - cronjobs
  - jobs
  verbs: ["list", "watch"]
- apiGroups: ["autoscaling"]
  resources:
  - horizontalpodautoscalers
  verbs: ["list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kube-state-metrics
  namespace: monitor
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: monitor

kube-state-metrics-Deployment.yaml

{
  "apiVersion": "apps/v1beta1",
  "kind": "Deployment",
  "metadata": {
    "name": "kube-state-metrics",
    "namespace": "monitor"
  },
  "spec": {
    "selector": {
      "matchLabels": {
        "k8s-app": "kube-state-metrics",
        "grafanak8sapp": "true"
      }
    },
    "replicas": 1,
    "template": {
      "metadata": {
        "labels": {
          "k8s-app": "kube-state-metrics",
          "grafanak8sapp": "true"
        }
      },
      "spec": {
        "serviceAccountName": "kube-state-metrics",
        "containers": [
          {
            "name": "kube-state-metrics",
            "image": "ist0ne/kube-state-metrics",
            "ports": [
              {
                "name": "http-metrics",
                "containerPort": 8080
              }
            ],
            "readinessProbe": {
              "httpGet": {
                "path": "/healthz",
                "port": 8080
              },
              "initialDelaySeconds": 5,
              "timeoutSeconds": 5
            }
          }
        ],
        "imagePullSecrets": [
          {
            "name": "authllzg"
          }
        ]
      }
    }
  }
}

node-exporter.yaml

{
  "kind": "DaemonSet",
  "apiVersion": "extensions/v1beta1",
  "metadata": {
    "name": "node-exporter",
    "namespace": "monitor"
  },
  "spec": {
    "selector": {
      "matchLabels": {
        "daemon": "node-exporter",
        "grafanak8sapp": "true"
      }
    },
    "template": {
      "metadata": {
        "name": "node-exporter",
        "labels": {
          "daemon": "node-exporter",
          "grafanak8sapp": "true"
        }
      },
      "spec": {
        "volumes": [
          {
            "name": "proc",
            "hostPath": {
              "path": "/proc"
            }
          },
          {
            "name": "sys",
            "hostPath": {
              "path": "/sys"
            }
          }
        ],
        "containers": [
          {
            "name": "node-exporter",
            "image": "prom/node-exporter:v0.15.0",
            "args": [
              "--path.procfs=/proc_host",
              "--path.sysfs=/host_sys"
            ],
            "ports": [
              {
                "name": "node-exporter",
                "hostPort": 9100,
                "containerPort": 9100
              }
            ],
            "volumeMounts": [
              {
                "name": "sys",
                "readOnly": true,
                "mountPath": "/host_sys"
              },
              {
                "name": "proc",
                "readOnly": true,
                "mountPath": "/proc_host"
              }
            ],
            "imagePullPolicy": "IfNotPresent"
          }
        ],
        "restartPolicy": "Always",
        "hostNetwork": true,
        "hostPID": true
      }
    }
  }
}

grafana-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-pvc
  # The Grafana pod can only mount a claim from its own namespace.
  namespace: monitor
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: "30Gi"
  volumeName:
  storageClassName: nfs

grafana-Deployment.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  labels:
    name: grafana-server
  name: grafana
  namespace: monitor
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: grafana-server
    spec:
      serviceAccountName: prometheus
      containers:
      - name: grafana
        image: grafana/grafana:latest
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: "/var/lib/grafana"
          readOnly: false
          name: grafana-pvc
        #env:
        #- name: GF_INSTALL_PLUGINS
        #  value: "grafana-kubernetes-app"
      imagePullSecrets:
      - name: authllzg
      volumes:
      - name: grafana-pvc
        persistentVolumeClaim:
          claimName: grafana-pvc

grafana-service.yaml

kind: Service
apiVersion: v1
metadata:
  name: grafana-ingress-service
  namespace: monitor
spec:
  selector:
    app: grafana-server
  ports:
  - protocol: TCP
    port: 3000
    name: grafana
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: grafana-ingress
  namespace: monitor
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: monitor.test.net
    http:
      paths:
      - path: /
        backend:
          serviceName: grafana-ingress-service
          servicePort: grafana

Configuration

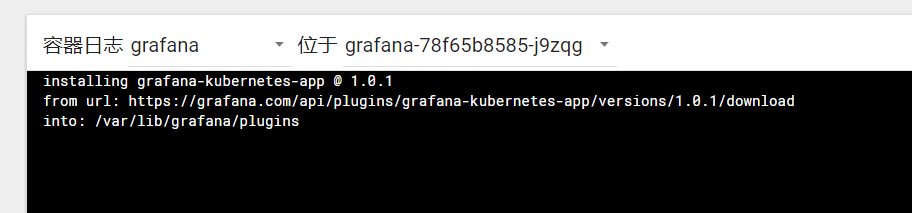

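Visit http://monitor.test.net

The request returns a 502 because Grafana has not started. The logs show that the plugin installation timed out; comment out GF_INSTALL_PLUGINS under env and restart.

The default username is admin and the default password is admin. You must change the password at first login.

To install the plugin, unpack it directly into the PVC and recreate the pod:

https://codeload.github.com/grafana/kubernetes-app/legacy.zip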

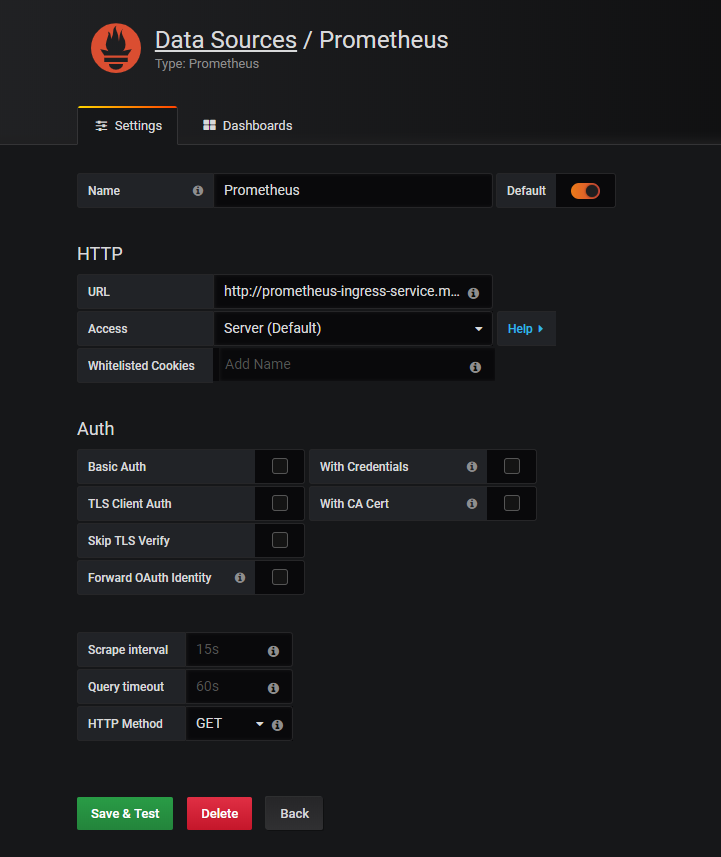

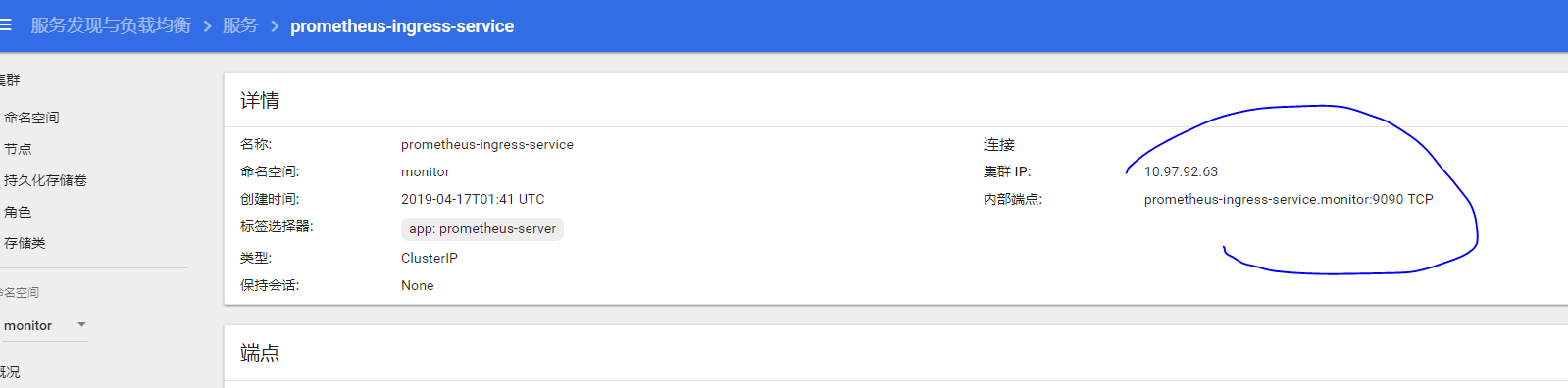

Add a Prometheus data source, using the in-cluster service address:

prometheus-ingress-service.monitor:9090

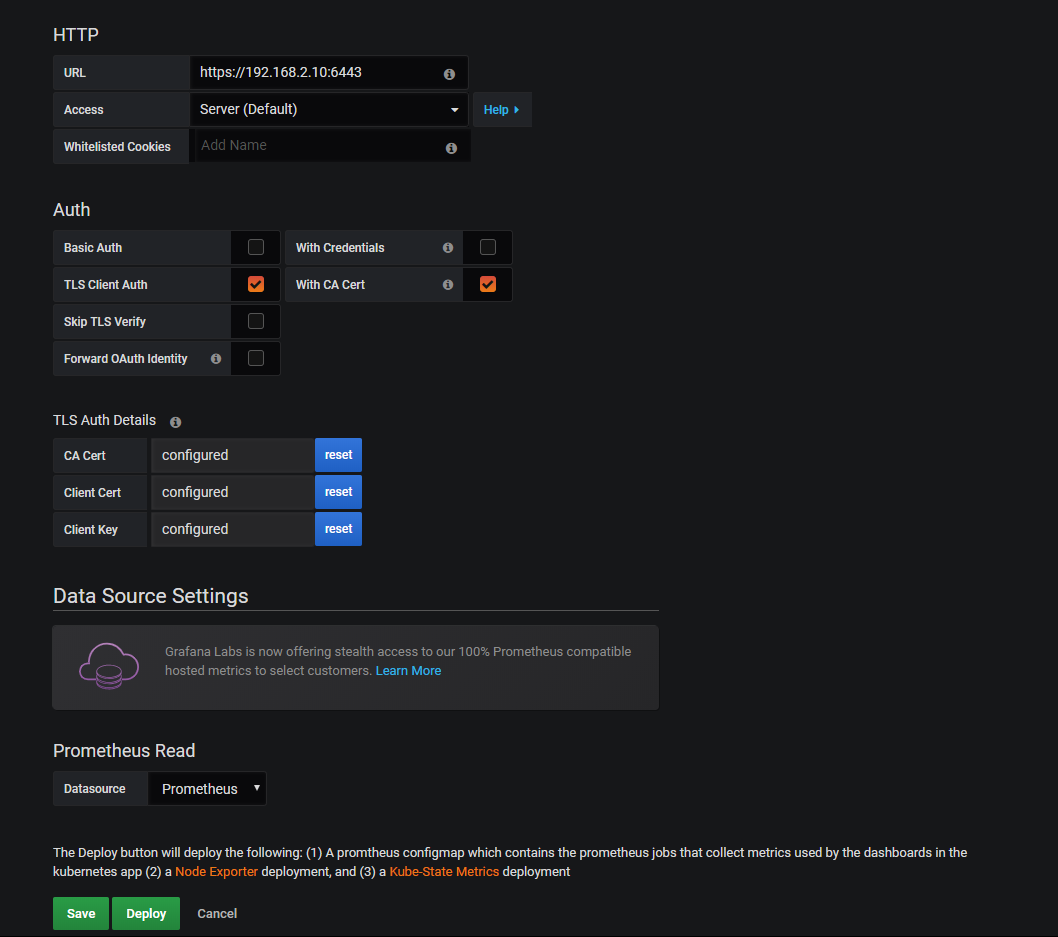
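The same data source can also be declared as a file instead of through the UI. The following is a minimal sketch, assuming a Grafana version with provisioning support (5.0 or later) and that the file is mounted under /etc/grafana/provisioning/datasources/. The file name and the data source name "prometheus" are illustrative assumptions, not part of the original setup.

```yaml
# prometheus-datasource.yaml (hypothetical file name)
# Mounted at /etc/grafana/provisioning/datasources/ inside the Grafana container.
apiVersion: 1
datasources:
- name: prometheus            # assumed name; pick any name you like
  type: prometheus
  access: proxy               # Grafana's backend queries Prometheus, not the browser
  url: http://prometheus-ingress-service.monitor:9090   # service address from this setup
  isDefault: true
```

Grafana reads provisioning files at startup, so the pod has to be recreated after the file is added.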

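Configure the grafana-kubernetes-app plugin

The URL is the address of the cluster's apiserver; it can be found in a ConfigMap in the kube-public namespace.

Under Auth, enable TLS Client Auth and With CA Cert.

The certificates are usually under /etc/kubernetes/pki: ca.crt, apiserver-kubelet-client.crt, and apiserver-kubelet-client.key.

Click Save; do not click Deploy.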

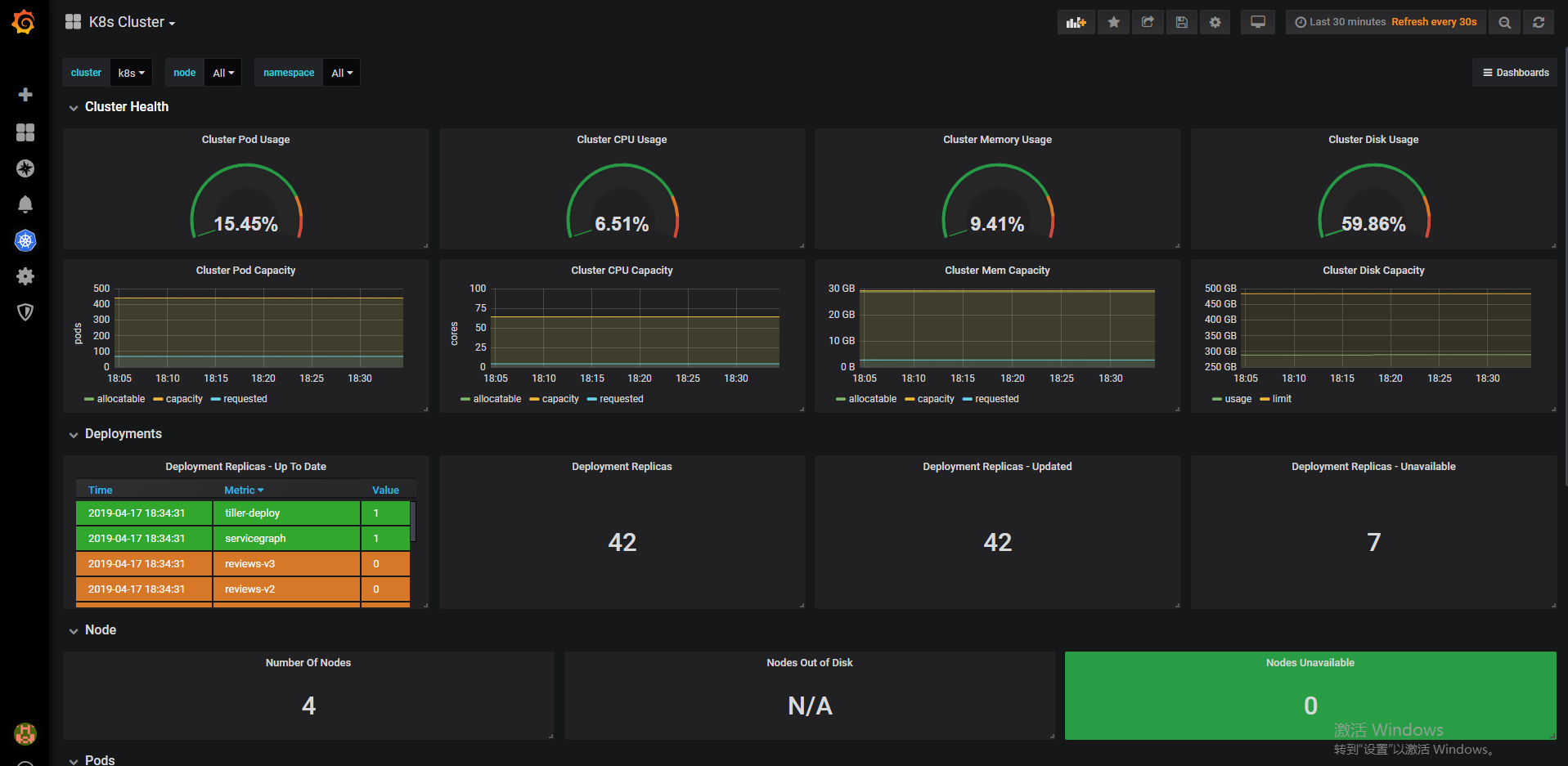

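Add a few useful dashboard templates:

315: charts for the metrics collected by cAdvisor.

1860: charts for the host-level metrics collected by node-exporter.

6417: charts for the state of the Kubernetes resource objects collected by kube-state-metrics.

4859 and 4865: charts for the HTTP status metrics of services collected by blackbox-exporter (the two look almost identical, so pick one).

5345: charts for the network status metrics of services collected by blackbox-exporter.

Wait a few minutes and the graphs will appear.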
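Dashboards 4859, 4865, and 5345 only show data if Prometheus probes services through a blackbox-exporter, which the manifests above do not deploy. The following scrape job is a rough sketch of how that could be wired up. It assumes a blackbox-exporter Service named blackbox-exporter in the monitor namespace on its default port 9115, an http_2xx module in its configuration, and an opt-in annotation prometheus.io/probe: "true" on the Services to probe. All three are assumptions and need adjusting to the actual deployment.

```yaml
# Sketch only: append under scrape_configs in prometheus.yml.
- job_name: 'kubernetes-services-blackbox'
  metrics_path: /probe
  params:
    module: [http_2xx]        # must match a module defined in blackbox-exporter
  kubernetes_sd_configs:
  - role: service
  relabel_configs:
  # Keep only Services that carry the (assumed) annotation prometheus.io/probe: "true".
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
    action: keep
    regex: "true"
  # Pass the discovered service address to blackbox-exporter as the probe target.
  - source_labels: [__address__]
    target_label: __param_target
  # Send the scrape itself to blackbox-exporter (hypothetical Service name).
  - target_label: __address__
    replacement: blackbox-exporter.monitor:9115
  # Show the probed address, not the exporter address, as the instance label.
  - source_labels: [__param_target]
    target_label: instance
  - source_labels: [__meta_kubernetes_namespace]
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_service_name]
    target_label: kubernetes_name
```

The prometheus ClusterRole defined earlier already allows get, list, and watch on services, so service discovery for this job should not need additional RBAC rules.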