Upgrading Kubernetes to 1.14.1

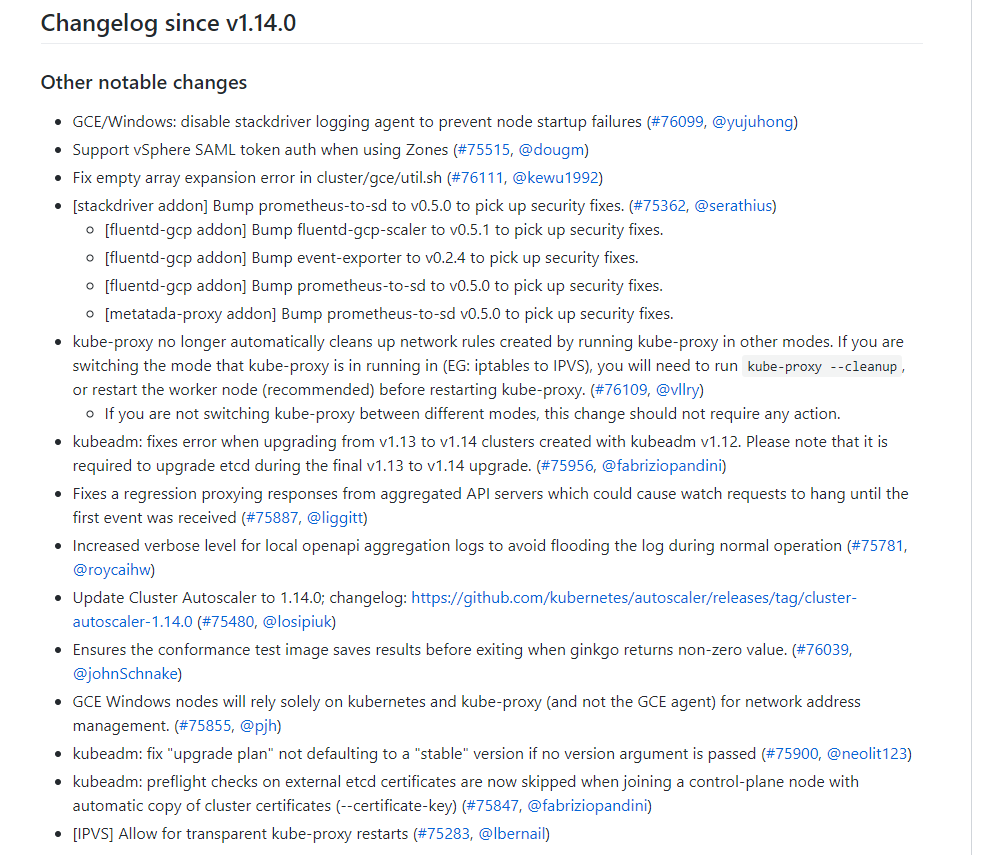

The changes in this release are minor: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.14.md#v1141

Upgrade kubeadm

First, set up the proxy:

export http_proxy='http://192.168.2.2:1080'

export https_proxy='http://192.168.2.2:1080'

export ftp_proxy='http://192.168.2.2:1080'

Upgrade kubelet, kubeadm, and kubectl on every node:

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

Check the upgrade plan:

[root@node01 ~]# kubeadm upgrade plan

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.14.0

[upgrade/versions] kubeadm version: v1.14.1

[upgrade/versions] Latest stable version: v1.14.1

[upgrade/versions] FATAL: etcd cluster contains endpoints with mismatched versions: map[https://192.168.2.11:2379:3.3.10 https://192.168.2.12:2379:3.2.24 https://192.168.2.13:2379:3.2.24]
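The FATAL line lists every etcd endpoint together with its version. As a small sketch, the map text can be split in the shell to show which endpoints lag behind. The helper name `stale_etcd_endpoints` is made up for this example and is not part of kubeadm; it assumes the `map[...]` text is copied verbatim from the message.

```shell
#!/bin/sh
# Sketch: print the etcd endpoints whose version differs from the expected one.
# stale_etcd_endpoints is a hypothetical helper, not a kubeadm command.
# Usage: stale_etcd_endpoints 'map[URL:VERSION URL:VERSION ...]' EXPECTED_VERSION
stale_etcd_endpoints() {
  map_text="$1"
  expected="$2"
  # Strip the leading "map[" and the trailing "]".
  map_text=${map_text#"map["}
  map_text=${map_text%"]"}
  # Entries are space-separated and look like https://HOST:PORT:VERSION.
  for entry in $map_text; do
    endpoint=${entry%:*}   # everything before the last colon
    version=${entry##*:}   # everything after the last colon
    if [ "$version" != "$expected" ]; then
      echo "$endpoint $version"
    fi
  done
}
```

Called with the map from the message above and `3.3.10` as the expected version, it prints the 192.168.2.12 and 192.168.2.13 endpoints, both still on 3.2.24.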

The etcd logs confirm that the member versions really are inconsistent:

2019-04-10 10:50:26.282303 W | etcdserver: the local etcd version 3.2.24 is not up-to-date

2019-04-10 10:50:26.282337 W | etcdserver: member 272dad16f2666b47 has a higher version 3.3.10

Checking the etcd static pod manifest /etc/kubernetes/manifests/etcd.yaml on each node shows that some nodes were never updated:

apiVersion: v1
kind: Pod
metadata:
  annotations:
    scheduler.alpha.kubernetes.io/critical-pod: ""
  creationTimestamp: null
  labels:
    component: etcd
    tier: control-plane
  name: etcd
  namespace: kube-system
spec:
  containers:
  - command:
    - etcd
    - --advertise-client-urls=https://192.168.2.12:2379
    - --cert-file=/etc/kubernetes/pki/etcd/server.crt
    - --client-cert-auth=true
    - --data-dir=/var/lib/etcd
    - --initial-advertise-peer-urls=https://192.168.2.12:2380
    - --initial-cluster=node01=https://192.168.2.11:2380,node02=https://192.168.2.12:2380
    - --initial-cluster-state=existing
    - --key-file=/etc/kubernetes/pki/etcd/server.key
    - --listen-client-urls=https://127.0.0.1:2379,https://192.168.2.12:2379
    - --listen-peer-urls=https://192.168.2.12:2380
    - --name=node02
    - --peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt
    - --peer-client-cert-auth=true
    - --peer-key-file=/etc/kubernetes/pki/etcd/peer.key
    - --peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
    - --snapshot-count=10000
    - --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
    image: k8s.gcr.io/etcd:3.2.24
    imagePullPolicy: IfNotPresent
    livenessProbe:
      exec:
        command:
        - /bin/sh
        - -ec
        - ETCDCTL_API=3 etcdctl --endpoints=https://[127.0.0.1]:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt
          --cert=/etc/kubernetes/pki/etcd/healthcheck-client.crt --key=/etc/kubernetes/pki/etcd/healthcheck-client.key
          get foo
      failureThreshold: 8
      initialDelaySeconds: 15
      timeoutSeconds: 15
    name: etcd
    resources: {}
    volumeMounts:
    - mountPath: /var/lib/etcd
      name: etcd-data
    - mountPath: /etc/kubernetes/pki/etcd
      name: etcd-certs
  hostNetwork: true
  priorityClassName: system-cluster-critical
  volumes:
  - hostPath:
      path: /etc/kubernetes/pki/etcd
      type: DirectoryOrCreate
    name: etcd-certs
  - hostPath:
      path: /var/lib/etcd
      type: DirectoryOrCreate
    name: etcd-data
status: {}
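The manifest on the stale node still pins k8s.gcr.io/etcd:3.2.24. The edit can be made by hand; as a rough sketch, it could also be scripted as below. The helper `set_etcd_image_tag` is a hypothetical name for this example. It stages the rewritten file with `mktemp` outside the manifests directory, on the assumption that stray copies inside /etc/kubernetes/manifests could be picked up by the kubelet as additional static pods.

```shell
#!/bin/sh
# Sketch: rewrite the tag on the "image: k8s.gcr.io/etcd:<tag>" line of a manifest.
# set_etcd_image_tag is a hypothetical helper, not a kubeadm or kubectl command.
# Usage: set_etcd_image_tag /etc/kubernetes/manifests/etcd.yaml 3.3.10
set_etcd_image_tag() {
  manifest="$1"
  new_tag="$2"
  # Stage the result in a temp file that lives outside the manifests directory.
  tmp=$(mktemp) || return 1
  # Replace everything after "image: k8s.gcr.io/etcd:" with the new tag.
  sed "s|\(image: k8s.gcr.io/etcd:\).*|\1${new_tag}|" "$manifest" > "$tmp" || return 1
  # Overwrite the contents in place so ownership and permissions are kept.
  cat "$tmp" > "$manifest" || return 1
  rm -f "$tmp"
}
```

Taking a copy of the original manifest somewhere outside /etc/kubernetes/manifests before running it keeps a rollback path.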

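After the image version in the manifest is changed to 3.3.10, Kubernetes recreates the etcd pod automatically. Running the upgrade plan again: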

[root@node01 ~]# kubeadm upgrade plan

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.14.0

[upgrade/versions] kubeadm version: v1.14.1

I0410 07:04:37.858133 81870 version.go:96] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable.txt": Get https://dl.k8s.io/release/stable.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

I0410 07:04:37.858210 81870 version.go:97] falling back to the local client version: v1.14.1

[upgrade/versions] Latest stable version: v1.14.1

I0410 07:04:47.938154 81870 version.go:96] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.14.txt": Get https://dl.k8s.io/release/stable-1.14.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

I0410 07:04:47.938207 81870 version.go:97] falling back to the local client version: v1.14.1

[upgrade/versions] Latest version in the v1.14 series: v1.14.1

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT   CURRENT       AVAILABLE
Kubelet     1 x v1.14.0   v1.14.1
            3 x v1.14.1   v1.14.1

Upgrade to the latest version in the v1.14 series:

COMPONENT            CURRENT   AVAILABLE
API Server           v1.14.0   v1.14.1
Controller Manager   v1.14.0   v1.14.1
Scheduler            v1.14.0   v1.14.1
Kube Proxy           v1.14.0   v1.14.1
CoreDNS              1.3.1     1.3.1
Etcd                 3.3.10    3.3.10

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.14.1

_____________________________________________________________________

Pull the images. Run the following on every node:

MY_REGISTRY=registry.cn-hangzhou.aliyuncs.com/openthings

docker pull ${MY_REGISTRY}/k8s-gcr-io-kube-apiserver:v1.14.1

docker pull ${MY_REGISTRY}/k8s-gcr-io-kube-controller-manager:v1.14.1

docker pull ${MY_REGISTRY}/k8s-gcr-io-kube-scheduler:v1.14.1

docker pull ${MY_REGISTRY}/k8s-gcr-io-kube-proxy:v1.14.1

docker tag ${MY_REGISTRY}/k8s-gcr-io-kube-apiserver:v1.14.1 k8s.gcr.io/kube-apiserver:v1.14.1

docker tag ${MY_REGISTRY}/k8s-gcr-io-kube-scheduler:v1.14.1 k8s.gcr.io/kube-scheduler:v1.14.1

docker tag ${MY_REGISTRY}/k8s-gcr-io-kube-controller-manager:v1.14.1 k8s.gcr.io/kube-controller-manager:v1.14.1

docker tag ${MY_REGISTRY}/k8s-gcr-io-kube-proxy:v1.14.1 k8s.gcr.io/kube-proxy:v1.14.1
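The eight pull and tag commands above follow a single pattern, so they can be generated in a loop. The sketch below only prints the commands, which makes it easy to review them before running anything. `mirror_commands` and the `VERSION` variable are names invented for this example; the registry path and the `k8s-gcr-io-` naming scheme are taken from the commands above.

```shell
#!/bin/sh
# Sketch: generate the docker pull/tag commands for the control plane images.
# mirror_commands and VERSION are illustrative names, not part of any tool.
MY_REGISTRY=registry.cn-hangzhou.aliyuncs.com/openthings
VERSION=v1.14.1

mirror_commands() {
  for component in kube-apiserver kube-controller-manager kube-scheduler kube-proxy; do
    src="${MY_REGISTRY}/k8s-gcr-io-${component}:${VERSION}"
    dst="k8s.gcr.io/${component}:${VERSION}"
    # Pull from the mirror, then retag under the name kubeadm expects.
    echo "docker pull ${src}"
    echo "docker tag ${src} ${dst}"
  done
}
```

Running `mirror_commands` shows the commands; `mirror_commands | sh` would execute them on the node.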

Apply the upgrade:

[root@node01 etcd]# kubeadm upgrade apply v1.14.1

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[upgrade/version] You have chosen to change the cluster version to "v1.14.1"

[upgrade/versions] Cluster version: v1.14.0

[upgrade/versions] kubeadm version: v1.14.1

[upgrade/confirm] Are you sure you want to proceed with the upgrade? [y/N]: y

[upgrade/prepull] Will prepull images for components [kube-apiserver kube-controller-manager kube-scheduler etcd]

[upgrade/prepull] Prepulling image for component etcd.

[upgrade/prepull] Prepulling image for component kube-scheduler.

[upgrade/prepull] Prepulling image for component kube-apiserver.

[upgrade/prepull] Prepulling image for component kube-controller-manager.

[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-etcd

[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver

[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler

[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-etcd

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager

[apiclient] Found 3 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver

[upgrade/prepull] Prepulled image for component kube-apiserver.

[upgrade/prepull] Prepulled image for component kube-controller-manager.

[upgrade/prepull] Prepulled image for component etcd.

[upgrade/prepull] Prepulled image for component kube-scheduler.

[upgrade/prepull] Successfully prepulled the images for all the control plane components

[upgrade/apply] Upgrading your Static Pod-hosted control plane to version "v1.14.1"...

Static pod: kube-apiserver-node01 hash: 5e3444aa15c18e29c7476a1efc770c3e

Static pod: kube-controller-manager-node01 hash: 0ff88c9b6e64cded3762e51ff18bce90

Static pod: kube-scheduler-node01 hash: 58272442e226c838b193bbba4c44091e

[upgrade/etcd] Upgrading to TLS for etcd

[upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests752931960"

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-apiserver.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2019-04-10-07-12-13/kube-apiserver.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-apiserver-node01 hash: 5e3444aa15c18e29c7476a1efc770c3e

Static pod: kube-apiserver-node01 hash: 5e3444aa15c18e29c7476a1efc770c3e

Static pod: kube-apiserver-node01 hash: 62b27594033ef04996c1fb72709b03f9

[apiclient] Found 3 Pods for label selector component=kube-apiserver

[apiclient] Found 2 Pods for label selector component=kube-apiserver

[upgrade/staticpods] Component "kube-apiserver" upgraded successfully!

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-controller-manager.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2019-04-10-07-12-13/kube-controller-manager.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-controller-manager-node01 hash: 0ff88c9b6e64cded3762e51ff18bce90

Static pod: kube-controller-manager-node01 hash: f4e6a574ceea76f0807a77e19a4d3b6c

[apiclient] Found 3 Pods for label selector component=kube-controller-manager

[upgrade/staticpods] Component "kube-controller-manager" upgraded successfully!

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-scheduler.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2019-04-10-07-12-13/kube-scheduler.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-scheduler-node01 hash: 58272442e226c838b193bbba4c44091e

Static pod: kube-scheduler-node01 hash: f44110a0ca540009109bfc32a7eb0baa

[apiclient] Found 3 Pods for label selector component=kube-scheduler

[upgrade/staticpods] Component "kube-scheduler" upgraded successfully!

[upload-config] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.14" in namespace kube-system with the configuration for the kubelets in the cluster

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.14" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.14.1". Enjoy!

[upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you haven't already done so.

The upgrade is complete:

[root@node01 etcd]# kubectl get nodes

NAME     STATUS   ROLES    AGE   VERSION
db       Ready    <none>   38d   v1.14.1
node01   Ready    master   76d   v1.14.1
node02   Ready    master   76d   v1.14.1
node03   Ready    master   76d   v1.14.1
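On a larger cluster, scanning the VERSION column by eye gets tedious. A minimal sketch of an automated check follows. It assumes the default `kubectl get nodes` layout shown above, where VERSION is the last column, and `nodes_not_at` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: print the names of nodes whose kubelet version is not the expected one.
# nodes_not_at is a hypothetical helper; it reads `kubectl get nodes` output on stdin.
# Usage: kubectl get nodes | nodes_not_at v1.14.1
nodes_not_at() {
  # Skip the header row, then compare the last column with the wanted version.
  awk -v want="$1" 'NR > 1 && $NF != want { print $1 }'
}
```

Empty output means every node reports the expected version.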